Egocentric Perception and Embodied Intelligence

To achieve Embodied Intelligence, AI models must learn to perceive, understand, and act within the world from a human-centric, first-person perspective. My central mission is to pioneer the field of Egocentric Perception for Embodied Intelligence, building the foundational science and technology for assistive AI agents embedded in wearable devices and collaborative robots. These agents will be able to see, think, memorize, and plan, proactively assisting users in both daily activities and complex professional tasks. This vision supports Digital Transformation by engineering the next generation of human-AI collaboration. Our work also addresses one of the most pressing societal challenges worldwide, population ageing, by developing the assistive technologies that ageing societies need, thereby contributing to a sustainable future. Specifically, my research topics include:

- Egocentric Perception: Modeling the physical world and human mental states from a first-person view for intention-aware collaboration.

- 3D Vision and Representation Learning: Building efficient 3D models for actionable spatial reasoning and simulation.

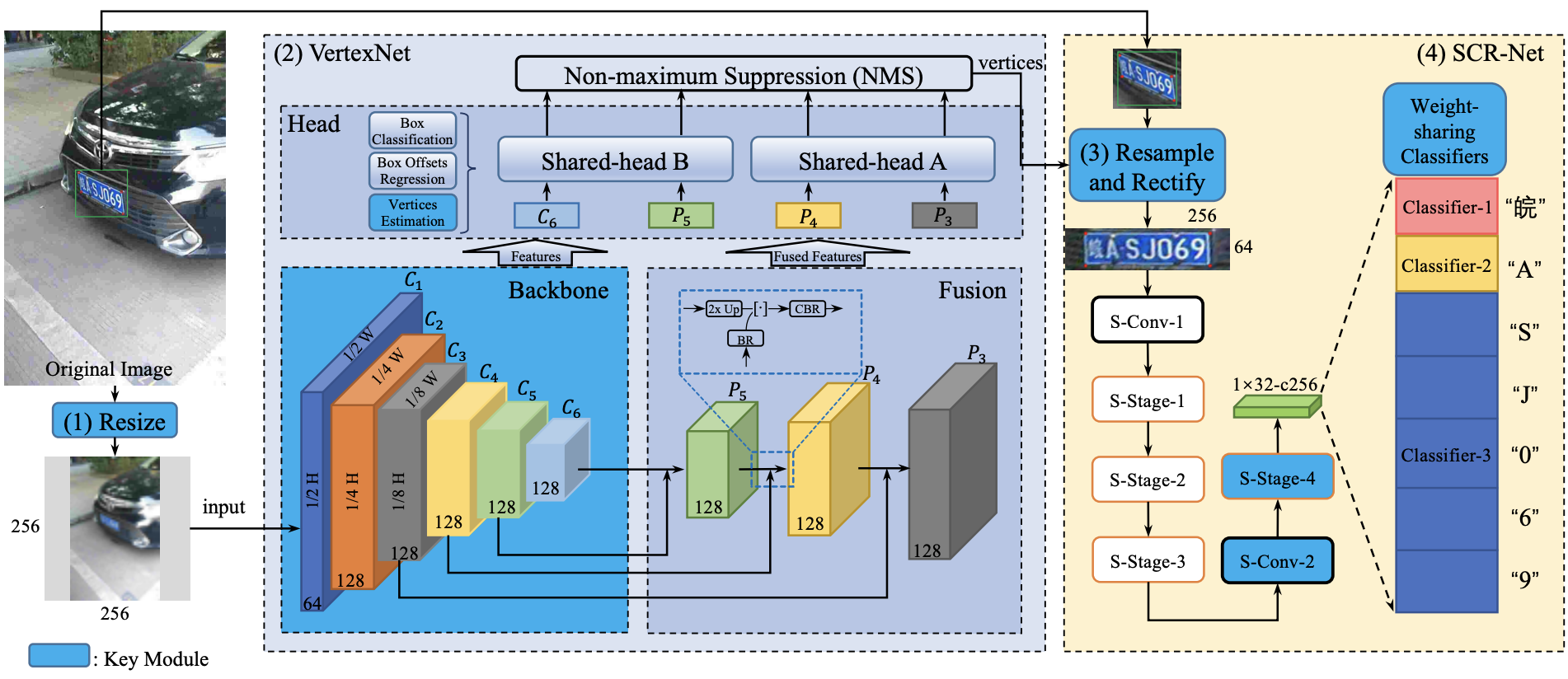

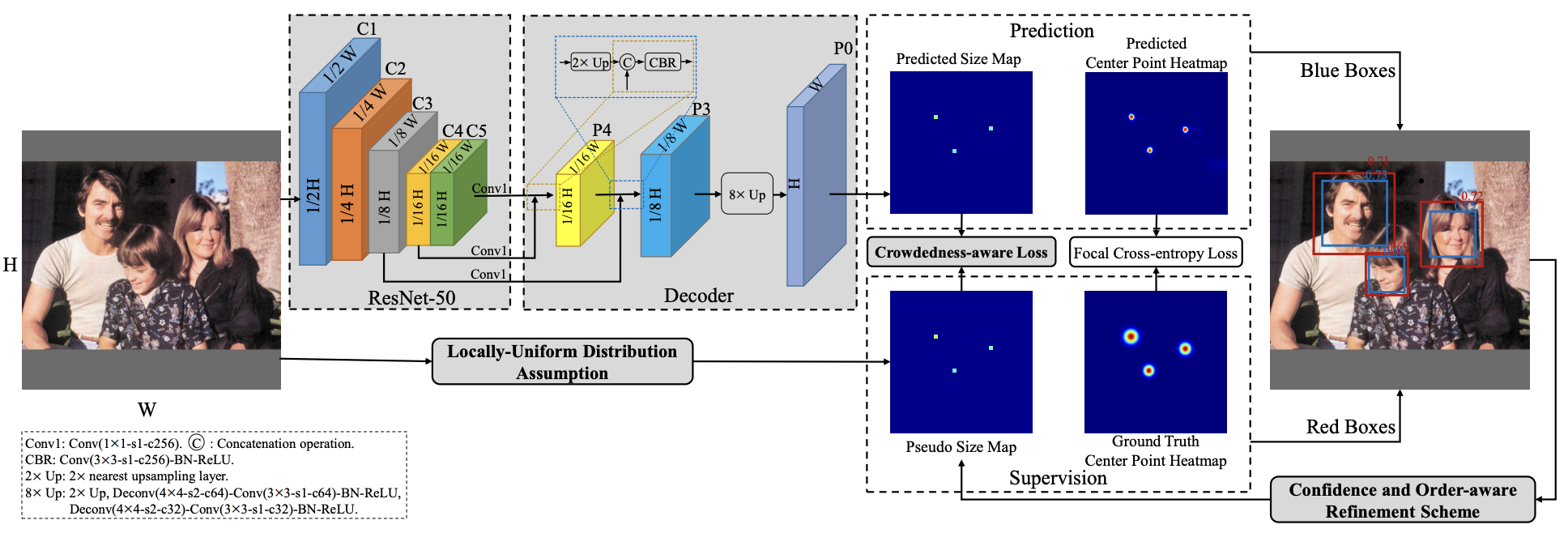

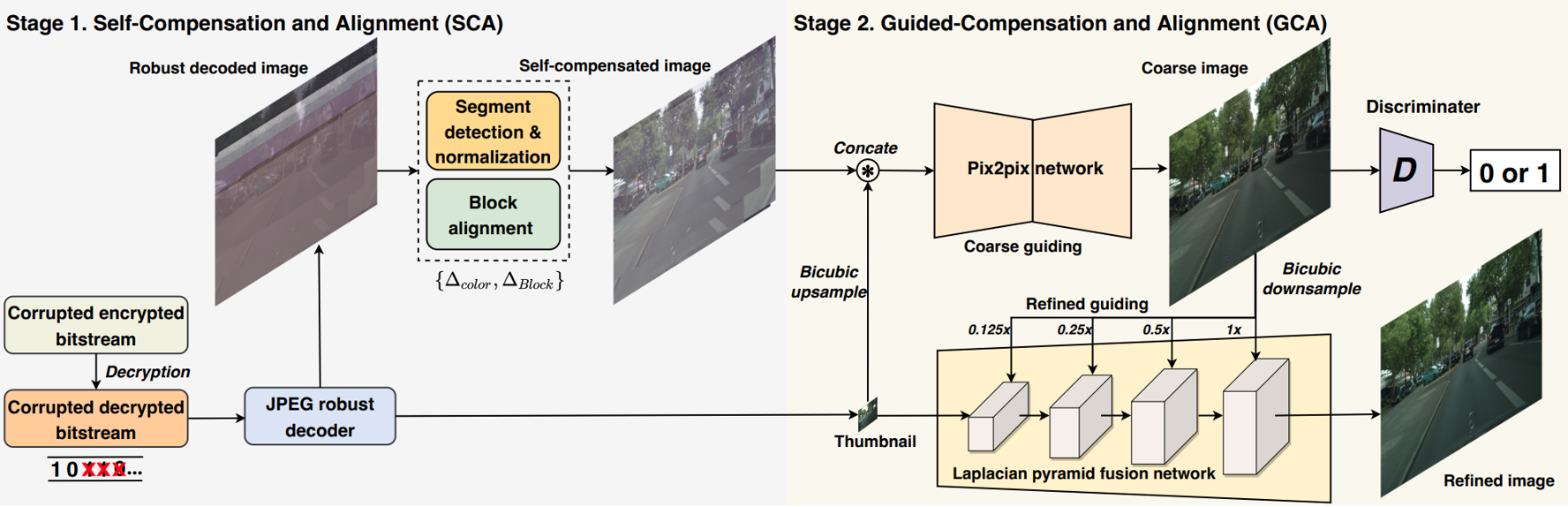

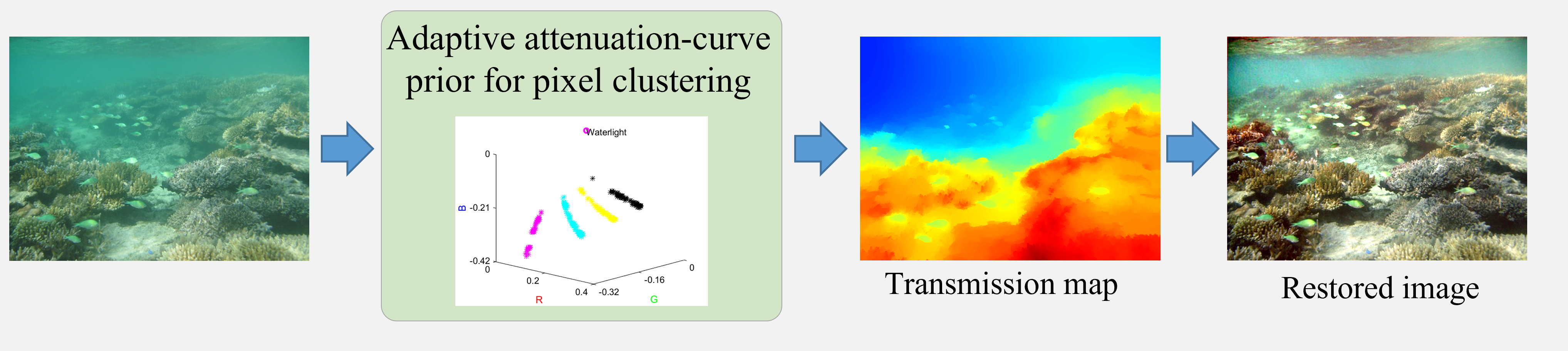

- Visual Information Processing: Infusing conventional image/video processing and analysis with new perspectives and novel viewpoints.

Research Grants

- Oct 2023 – Jun 2026, PolyU Start-Up Fund, PI: Visual Sensing, Restoration, and Analytics in Complex Scenes.

- Jun 2025 – May 2026, PolyU (non-UGC), PI: Open-world 3D Scene and Interaction from Egocentric Video.

- Jan 2025 – Dec 2025, PolyU EEE, Co-PI: EEE Special Research Topics 2025.

- Nov 2023 – Nov 2028, Team Leader, Supervisor/Co-supervisor: Jockey Club STEM Lab of Machine Learning and Computer Vision.